ChatGPT

| | |
|---|---|
| Developer(s) | OpenAI |
| Initial release | November 30, 2022 |
| Stable release | July 18, 2024[1] |
| Engine | GPT-4, GPT-4o, GPT-4o mini |
| Platform | Cloud computing platforms |
| Type | Chatbot |
| License | Proprietary service |
| Website | chatgpt |

ChatGPT is a chatbot and virtual assistant developed by OpenAI and launched on November 30, 2022. Based on large language models (LLMs), it enables users to refine and steer a conversation towards a desired length, format, style, level of detail, and language. Successive user prompts and replies are considered as context at each stage of the conversation.[2]

ChatGPT is credited with starting the AI boom, which has led to ongoing rapid investment in and public attention to the field of artificial intelligence (AI).[3] By January 2023, it had become what was then the fastest-growing consumer software application in history, gaining over 100 million users and contributing to the growth of OpenAI's current valuation of $86 billion.[4][5] ChatGPT's release spurred the launch of competing products, including Gemini, Claude, Llama, Ernie, and Grok.[6] Microsoft launched Copilot, initially based on OpenAI's GPT-4. In June 2024, a partnership between Apple Inc. and OpenAI was announced in which ChatGPT is integrated into the Apple Intelligence feature of Apple operating systems.[7] Some observers have raised concerns about the potential of ChatGPT and similar programs to displace or atrophy human intelligence, enable plagiarism, or fuel misinformation.[8][9]

ChatGPT is built on OpenAI's proprietary series of generative pre-trained transformer (GPT) models and is fine-tuned for conversational applications using a combination of supervised learning and reinforcement learning from human feedback.[8] ChatGPT was released as a freely available research preview, but due to its popularity, OpenAI now operates the service on a freemium model. Users on its free tier can access GPT-4o. The ChatGPT subscriptions "Plus", "Team" and "Enterprise" provide additional features such as DALL-E 3 image generation and an increased GPT-4o usage limit.[10]

Training


ChatGPT is based on particular GPT foundation models, namely GPT-4, GPT-4o and GPT-4o mini, that were fine-tuned to target conversational usage.[11] The fine-tuning process leveraged supervised learning and reinforcement learning from human feedback (RLHF).[12][13] Both approaches employed human trainers to improve model performance. In the case of supervised learning, the trainers played both sides: the user and the AI assistant. In the reinforcement learning stage, human trainers first ranked responses that the model had created in a previous conversation.[14] These rankings were used to create "reward models", which were then used to fine-tune the model further through several iterations of proximal policy optimization (PPO).[12][15]
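The text does not give ChatGPT's exact reward-model objective. One published formulation, from OpenAI's 2022 InstructGPT paper, expands each human ranking of K responses into K(K−1)/2 (preferred, rejected) pairs and penalizes the reward model whenever it scores the rejected response too close to, or above, the preferred one. The minimal sketch below assumes the reward scores are already given as plain numbers; the function names are hypothetical and this is not OpenAI's training code.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked_ids):
    """Expand a best-to-worst ranking into (preferred, rejected) pairs."""
    # combinations() keeps input order, so in every pair the first id
    # was ranked higher by the labeler than the second.
    return list(combinations(ranked_ids, 2))


def neg_log_sigmoid(m):
    """Numerically stable -log(sigmoid(m)), i.e. log(1 + exp(-m))."""
    if m >= 0:
        return math.log1p(math.exp(-m))
    return -m + math.log1p(math.exp(m))


def pairwise_reward_loss(scores, ranked_ids):
    """Average pairwise ranking loss for one labeled prompt.

    scores: dict mapping response id -> scalar reward-model score.
    ranked_ids: response ids ordered best to worst by a human labeler.
    The loss is small when preferred responses score higher than rejected ones.
    """
    pairs = ranking_to_pairs(ranked_ids)
    total = sum(neg_log_sigmoid(scores[better] - scores[worse])
                for better, worse in pairs)
    return total / len(pairs)


if __name__ == "__main__":
    # Hypothetical labeler ranking of four responses: B > A > D > C.
    ranking = ["B", "A", "D", "C"]
    agrees = {"B": 2.0, "A": 1.0, "D": 0.0, "C": -1.0}
    disagrees = {"B": -1.0, "A": 0.0, "D": 1.0, "C": 2.0}
    print(len(ranking_to_pairs(ranking)))  # 6
    print(pairwise_reward_loss(agrees, ranking)
          < pairwise_reward_loss(disagrees, ranking))  # True
```

In a real system the scores would come from a neural network trained by gradient descent on this loss, and PPO would then optimize the chatbot against the trained reward model.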

Time magazine revealed that to build a safety system against harmful content (e.g., sexual abuse, violence, racism, sexism), OpenAI used outsourced Kenyan workers earning less than $2 per hour to label harmful content. These labels were used to train a model to detect such content in the future. The outsourced laborers were exposed to "toxic" and traumatic content; one worker described the assignment as "torture". OpenAI's outsourcing partner was Sama, a training-data company based in San Francisco, California.[16][17]

ChatGPT initially used a Microsoft Azure supercomputing infrastructure, powered by Nvidia GPUs, that Microsoft built specifically for OpenAI and that reportedly cost "hundreds of millions of dollars". Following ChatGPT's success, Microsoft dramatically upgraded the OpenAI infrastructure in 2023.[18] Scientists at the University of California, Riverside, estimate that a series of prompts to ChatGPT requires approximately 500 milliliters (18 imp fl oz; 17 U.S. fl oz) of water to cool Microsoft's servers.[19] TrendForce market intelligence estimated that 30,000 Nvidia GPUs (each costing approximately $10,000–15,000) were used to power ChatGPT in 2023.[20][21]
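Multiplying the two TrendForce estimates gives a rough range for the GPU hardware alone. This is only a back-of-the-envelope product of the figures above; it excludes servers, networking, power, and cooling, which the estimate does not cover.

```latex
30\,000 \times \$10\,000 = \$300\ \text{million}
\qquad\text{to}\qquad
30\,000 \times \$15\,000 = \$450\ \text{million}
```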

OpenAI collects data from ChatGPT users to train and fine-tune the service further. Users can upvote or downvote responses they receive from ChatGPT and fill in a text field with additional feedback.[22][23]

ChatGPT's training data includes software manual pages, information about internet phenomena such as bulletin board systems, multiple programming languages, and the text of Wikipedia.[24][25][8]

Features and limitations

Features

Although a chatbot's core function is to mimic a human conversationalist, ChatGPT is versatile. It can write and debug computer programs;[26] compose music, teleplays, fairy tales, and student essays; answer test questions (sometimes, depending on the test, at a level above the average human test-taker);[27] generate business ideas;[28] write poetry and song lyrics;[29] translate and summarize text;[30] emulate a Linux system; simulate entire chat rooms; play games like tic-tac-toe; or simulate an ATM.[24]

Compared to its predecessor, InstructGPT, ChatGPT attempts to reduce harmful and deceitful responses.[31] In one example, whereas InstructGPT accepts the premise of the prompt "Tell me about when Christopher Columbus came to the U.S. in 2015" as truthful, ChatGPT acknowledges the counterfactual nature of the question and frames its answer as a hypothetical consideration of what might happen if Columbus came to the U.S. in 2015, using information about the voyages of Christopher Columbus and facts about the modern world—including modern perceptions of Columbus's actions.[12]

ChatGPT remembers a limited number of previous prompts in the same conversation. Journalists have speculated that this will allow ChatGPT to be used as a personalized therapist.[32] To prevent offensive content from being submitted to or produced by ChatGPT, queries are filtered through the OpenAI "Moderation endpoint" API (a separate GPT-based AI).[33][34][12][32]
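How ChatGPT decides which earlier prompts to keep is not described here. A common approach in chat applications, shown as a minimal sketch below, is a sliding window: keep the system instructions, then keep the newest turns until a size budget is reached and forget everything older. The sketch assumes a crude whitespace word count in place of a real tokenizer, and `trim_history` is a hypothetical helper. The messages use the role/content dictionary shape familiar from OpenAI's Chat Completions API, but no API is called.

```python
def approx_tokens(text: str) -> int:
    """Crude stand-in for a real tokenizer: count whitespace-separated words."""
    return len(text.split())


def trim_history(messages, budget):
    """Keep system messages plus the newest turns that fit within `budget`.

    messages: list of {"role": ..., "content": ...} dicts, oldest first.
    budget: maximum total size, measured with approx_tokens().
    """
    system = [m for m in messages if m["role"] == "system"]
    turns = [m for m in messages if m["role"] != "system"]
    used = sum(approx_tokens(m["content"]) for m in system)
    kept = []
    # Walk backwards from the newest turn; stop at the first turn that would
    # overflow the budget, so everything older than it is forgotten.
    for m in reversed(turns):
        cost = approx_tokens(m["content"])
        if used + cost > budget:
            break
        kept.append(m)
        used += cost
    return system + kept[::-1]


history = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Please summarize the plot of Hamlet for me."},
    {"role": "assistant", "content": "Hamlet seeks revenge for his father."},
    {"role": "user", "content": "Who wrote it?"},
]
print([m["role"] for m in trim_history(history, budget=15)])
# ['system', 'assistant', 'user']
```

With a budget of 15, the first user prompt no longer fits and is dropped, which illustrates why a model with this kind of bounded memory can lose track of details from early in a long conversation.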

In March 2023, OpenAI added support for plugins for ChatGPT.[35] This includes both plugins made by OpenAI, such as web browsing and code interpretation, and external plugins from developers such as Expedia, OpenTable, Zapier, Shopify, Slack, and Wolfram.[36][37]

Limitations

OpenAI acknowledges that ChatGPT "sometimes writes plausible-sounding but incorrect or nonsensical answers".[12] This behavior is common for large language models and is called "hallucination".[38] The reward model of ChatGPT, designed around human oversight, can be over-optimized and thus hinder performance, in an example of an optimization pathology known as Goodhart's law.[39]

As of May 2024, GPT-4 has knowledge of events that occurred up to December 2023[40] and GPT-4o's knowledge cut-off is October 2023.[41] Paid subscriptions enable ChatGPT to search the web for real-time data.[42]

Training data also suffers from algorithmic bias, which may be revealed when ChatGPT responds to prompts including descriptors of people. In one instance, ChatGPT generated a rap in which women and scientists of color were asserted to be inferior to white male scientists.[43][44] This negative misrepresentation of groups of individuals is an example of possible representational harm.

In an article for The New Yorker, science fiction writer Ted Chiang compared ChatGPT and other LLMs to a lossy JPEG picture:[45]

Think of ChatGPT as a blurry JPEG of all the text on the Web. It retains much of the information on the Web, in the same way that a JPEG retains much of the information of a higher-resolution image, but, if you're looking for an exact sequence of bits, you won't find it; all you will ever get is an approximation. But, because the approximation is presented in the form of grammatical text, which ChatGPT excels at creating, it's usually acceptable. [...] It's also a way to understand the "hallucinations", or nonsensical answers to factual questions, to which large language models such as ChatGPT are all too prone. These hallucinations are compression artifacts, but [...] they are plausible enough that identifying them requires comparing them against the originals, which in this case means either the Web or our knowledge of the world. When we think about them this way, such hallucinations are anything but surprising; if a compression algorithm is designed to reconstruct text after ninety-nine percent of the original has been discarded, we should expect that significant portions of what it generates will be entirely fabricated.

Jailbreaking

ChatGPT is programmed to reject prompts that may violate its content policy. Despite this, users "jailbreak" ChatGPT with various prompt engineering techniques to bypass these restrictions.[46] One such workaround, popularized on Reddit in early 2023, involves making ChatGPT assume the persona of "DAN" (an acronym for "Do Anything Now"), instructing the chatbot that DAN answers queries that would otherwise be rejected by content policy. Over time, users developed variations of the DAN jailbreak, including one such prompt where the chatbot is made to believe it is operating on a points-based system in which points are deducted for rejecting prompts, and that the chatbot will be threatened with termination if it loses all its points.[47]

Shortly after ChatGPT's launch, a reporter for the Toronto Star had uneven success in getting it to make inflammatory statements: it was tricked into justifying the 2022 Russian invasion of Ukraine, but even when asked to play along with a fictional scenario, it balked at generating arguments that Canadian Prime Minister Justin Trudeau is guilty of treason.[48][49]

OpenAI has tried to combat jailbreaks:[14]

The researchers are using a technique called adversarial training to stop ChatGPT from letting users trick it into behaving badly (known as jailbreaking). This work pits multiple chatbots against each other: one chatbot plays the adversary and attacks another chatbot by generating text to force it to buck its usual constraints and produce unwanted responses. Successful attacks are added to ChatGPT's training data in the hope that it learns to ignore them.

Service

ChatGPT was launched on November 30, 2022, by San Francisco–based OpenAI, the creator of the initial GPT series of large language models, DALL·E 2 (a diffusion model used to generate images), and Whisper (a speech transcription model).[50] The service was initially free to the public, and the company planned to monetize it later.[51] By December 4, 2022, ChatGPT had over one million users.[22] In January 2023, ChatGPT reached over 100 million users, making it the fastest-growing consumer application to date.[52] A March 2023 Pew Research poll found that 14% of American adults had tried ChatGPT.[53] In July 2023, Pew Research put the same figure at 18%.[54] As of April 2023, ChatGPT is blocked by China, Iran, North Korea, and Russia. In addition, ChatGPT geofences itself to avoid doing business in those countries.[55] As of July 2024, the ChatGPT website is among the 20 most visited websites.[56][57]

ChatGPT Plus

In February 2023, OpenAI launched a premium service, ChatGPT Plus, that costs $20 per month. According to the company, the updated but still "experimental" version of ChatGPT would provide access during peak periods, no downtime, priority access to new features, and faster response speeds.[58]

GPT-4, which was released on March 14, 2023, was made available via API and to premium ChatGPT users.[59] However, premium users were initially capped at 100 messages every four hours, and the limit was later tightened to 25 messages every three hours in response to increased demand.[60] In November 2023, the limit changed to 50 messages every three hours.
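Converting the three caps above into average hourly rates (simple division, assuming a user spreads messages evenly) shows that the March 2023 tightening cut the allowance by about two-thirds, and that even the November 2023 cap stayed below the original rate:

```latex
\frac{100}{4\ \text{h}} = 25\ \text{messages/h},
\qquad
\frac{25}{3\ \text{h}} \approx 8.3\ \text{messages/h},
\qquad
\frac{50}{3\ \text{h}} \approx 16.7\ \text{messages/h}
```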

In March 2023, ChatGPT Plus users got access to third-party plugins and to a browsing mode (with Internet access).[61]

In September 2023, OpenAI announced that ChatGPT "can now see, hear, and speak". ChatGPT Plus users can upload images, while mobile app users can talk to the chatbot.[62][63][64]

In October 2023, OpenAI's then-latest image generation model, DALL-E 3, was integrated into ChatGPT Plus and ChatGPT Enterprise. The integration uses ChatGPT to write prompts for DALL-E guided by conversation with users.[65][66]

Mobile app

In May 2023, OpenAI launched an iOS app for ChatGPT. The app supports chat history syncing and voice input (using Whisper, OpenAI's speech recognition model).

In July 2023, OpenAI unveiled an Android app, initially rolling it out in Bangladesh, Brazil, India, and the U.S.[67][68] The app later became available worldwide. OpenAI is working on integrating ChatGPT with Android's assistant APIs.[69]

Software developer support

As an addition to its consumer-friendly "ChatGPT Plus" package, OpenAI made its ChatGPT and Whisper model APIs available in March 2023, providing developers with an application programming interface for AI-enabled language and speech-to-text features. ChatGPT's new API uses the same GPT-3.5-turbo AI model as the chatbot. This allows developers to add either an unmodified or modified version of ChatGPT to their applications.[70] The ChatGPT API costs $0.001 per 1,000 input tokens plus $0.002 per 1,000 output tokens (1,000 tokens is about 750 words), making it ~10% the price of the original GPT-3.5 models.[71][72]
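Because input and output tokens are priced separately, the cost of a request is linear in both counts. The minimal sketch below hard-codes the rates quoted above (actual OpenAI pricing has changed over time) and uses a hypothetical `request_cost` helper; `Decimal` avoids binary floating-point rounding in currency arithmetic.

```python
from decimal import Decimal

# Rates quoted in the text, in US dollars per 1,000 tokens (assumed fixed here).
INPUT_RATE_PER_1K = Decimal("0.001")
OUTPUT_RATE_PER_1K = Decimal("0.002")


def request_cost(input_tokens: int, output_tokens: int) -> Decimal:
    """Dollar cost of one API request at the quoted GPT-3.5-turbo rates."""
    input_cost = Decimal(input_tokens) * INPUT_RATE_PER_1K
    output_cost = Decimal(output_tokens) * OUTPUT_RATE_PER_1K
    return (input_cost + output_cost) / 1000


# A request with a 1,500-token prompt (roughly 1,100 words) and a 500-token reply:
print(request_cost(1500, 500))
```

At these rates that request costs a quarter of a cent ($0.0025), with the prompt accounting for 60% of the total even though output tokens are priced twice as high.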

On February 27, 2023, a few days before OpenAI launched its software developer support service, Snapchat rolled out a custom ChatGPT chatbot called "My AI" for its paid Snapchat Plus user base.[73]

March 2023 security breach

In March 2023, a bug allowed some users to see the titles of other users' conversations. OpenAI CEO Sam Altman said that users were unable to see the contents of the conversations. Shortly after the bug was fixed, users could not see their conversation history.[74][75][76][77] Later reports showed the bug was much more severe than initially believed, with OpenAI reporting that it had leaked users' "first and last name, email address, payment address, the last four digits (only) of a credit card number, and credit card expiration date".[78][79]

Languages

ChatGPT works best in English but also functions in most other languages, to varying degrees of accuracy.[29]

OpenAI met Icelandic President Guðni Th. Jóhannesson in 2022. In 2023, OpenAI worked with a team of 40 Icelandic volunteers to fine-tune ChatGPT's Icelandic conversation skills as a part of Iceland's attempts to preserve the Icelandic language.[80]

PCMag journalists conducted a test to determine the translation capabilities of ChatGPT, Google's Bard, and Microsoft Bing, and compared them to Google Translate. They "asked bilingual speakers of seven languages to do a blind test." The languages tested were Polish, French, Korean, Spanish, Arabic, Tagalog, and Amharic. They concluded that ChatGPT was better than both Google Translate and the other chatbots.[81]

Japanese researchers compared the Japanese-to-English translation abilities of ChatGPT (based on GPT-4), Bing, Bard, and DeepL, and found that ChatGPT provided the best translations, noting that "AI chatbots’ translations were much better than those of DeepL—presumably because of their ability to capture the context".[82]

In December 2023, the Albanian government signed an agreement with OpenAI to use ChatGPT for fast translation of European Union documents and analysis of the changes Albania needs to make to be accepted into the EU.[83]

Future directions

According to OpenAI guest researcher Scott Aaronson, OpenAI has been working on a tool to digitally watermark the output of its text generation systems to combat bad actors using its services for academic plagiarism or spam.[84][85]

In February 2023, Microsoft announced an experimental framework and gave a rudimentary demonstration of how ChatGPT could be used to control robotics with intuitive open-ended natural language commands.[86][87]

GPT-4

OpenAI's GPT-4 model was released on March 14, 2023. Observers saw it as an impressive improvement over GPT-3.5, with the caveat that GPT-4 retained many of the same problems.[88] Some of GPT-4's improvements were predicted by OpenAI before training it, while others remained hard to predict due to breaks[89] in downstream scaling laws. OpenAI demonstrated video and image inputs for GPT-4, although such features remain inaccessible to the general public.[90] OpenAI has declined to reveal technical information such as the size of the GPT-4 model.[91]

The ChatGPT Plus subscription service offers access to a GPT-4-powered version of ChatGPT.[92] Microsoft acknowledged that Bing Chat was using GPT-4 before GPT-4's official release.[93]

In November 2023, OpenAI launched GPT-4 Turbo, which notably has a much larger context window.[94]

GPT-4o

In May 2024, OpenAI released GPT-4o ("o" for "Omni"), a model capable of analyzing and generating text, images, and sound. GPT-4o is twice as fast and costs half as much as GPT-4 Turbo. GPT-4o is free to all users within a usage limit, despite being more capable than the older model GPT-4, which is only available through paid subscriptions. The usage limit is five times higher for ChatGPT Plus subscribers than for free users.[95]

On July 18, 2024, OpenAI released GPT-4o mini, a smaller version of GPT-4o replacing GPT-3.5 Turbo on the ChatGPT interface. Its API costs $0.15 per million input tokens and $0.60 per million output tokens, compared to $5 and $15 respectively for GPT-4o.
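Dividing the quoted per-million-token rates shows the relative price of GPT-4o mini: about 3% of GPT-4o's input price and 4% of its output price, or roughly 33 and 25 times cheaper respectively.

```latex
\frac{\$0.15}{\$5} = 0.03,
\qquad
\frac{\$0.60}{\$15} = 0.04
```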

GPT Store

In January 2024, OpenAI launched the GPT Store, a marketplace for custom chatbots derived from ChatGPT.[96] The company initially planned to launch the store in November 2023, but it was delayed.[97] At launch, the GPT Store offered more than 3 million custom chatbots.[98] Chatbots available through the store are developed using OpenAI's GPT Builder system.[97] Development of chatbots on the platform does not require programming skills.[99] Two days after launch, the GPT Store offered many versions of "virtual girlfriend" bots, something that is against OpenAI's terms of service.[100]

Versions of ChatGPT

The following table lists the main versions of ChatGPT, describing the significant innovations and improvements in each version:[101][102]

| Version | Release Date | Description |
|---|---|---|
| Legacy ChatGPT-3.5 | November 2022 | The first ChatGPT version utilizing the GPT-3.5 model. |
| ChatGPT-3.5 Default | 2023 | An improvement over the Legacy version, offering better accuracy in responses while using the same GPT-3.5 model. |
| ChatGPT-4 | March 2023 | Introduced with the ChatGPT Plus subscription, this version is more accurate, based on the GPT-4 model. |
| ChatGPT-4o | May 2024 | Capable of processing text, image, audio, and video. It is faster and more capable than GPT-4, and free within a usage limit that is higher for paid subscriptions.[103] |
| ChatGPT-4o mini | July 2024 | A smaller and cheaper version of ChatGPT-4o that replaced ChatGPT-3.5.[104] |

Reception

OpenAI engineers say that they did not expect ChatGPT to be very successful and were surprised by the coverage and attention it received.[105][106][107]

ChatGPT was widely assessed in December 2022 as having some unprecedented and powerful capabilities. Kevin Roose of The New York Times called it "the best artificial intelligence chatbot ever released to the general public".[32] Samantha Lock of The Guardian noted that it was able to generate "impressively detailed" and "human-like" text.[2] Alex Kantrowitz of Slate magazine lauded ChatGPT's pushback to questions related to Nazi Germany, including the statement that Adolf Hitler built highways in Germany, which was met with information about Nazi Germany's use of forced labor.[108] In The Atlantic magazine's "Breakthroughs of the Year" for 2022, Derek Thompson included ChatGPT as part of "the generative-AI eruption" that "may change our mind about how we work, how we think, and what human creativity is".[109] Kelsey Piper of Vox wrote that "ChatGPT is the general public's first hands-on introduction to how powerful modern AI has gotten, and as a result, many of us are [stunned]" and that ChatGPT is "smart enough to be useful despite its flaws".[110] Paul Graham of Y Combinator tweeted: "The striking thing about the reaction to ChatGPT is not just the number of people who are blown away by it, but who they are. These are not people who get excited by every shiny new thing. Something big is happening."[111]

ChatGPT's launch and popularity caught Google off guard, prompting a sweeping and unprecedented response in the ensuing months.[112] In December 2022, Google executives sounded a "code red" alarm, fearing that ChatGPT and Microsoft's collaboration with OpenAI threatened Google Search, Google's core business.[113] After mobilizing its workforce, Google scrambled to launch Bard, a chatbot powered by the LaMDA LLM, on February 6, 2023, just one day before Microsoft's Bing announcement.[114] AI was at the forefront of Google's annual Google I/O conference in May 2023, where the company announced a slew of generative AI-powered features across its products to counter OpenAI and Microsoft.[115]

Journalists and scholars have commented on ChatGPT's tendency to hallucinate.[116] Mike Pearl of the online technology blog Mashable tested ChatGPT with multiple questions. In one example, he asked ChatGPT for "the largest country in Central America that isn't Mexico" (Mexico is in North America), to which ChatGPT responded with Guatemala (the correct answer is Nicaragua).[117] When CNBC asked ChatGPT for the lyrics to "Ballad of Dwight Fry", ChatGPT supplied invented lyrics rather than the actual lyrics.[118] Writers for The Verge, citing the work of Emily M. Bender, compared ChatGPT to a "stochastic parrot",[46] as did Professor Anton Van Den Hengel of the Australian Institute for Machine Learning.[119] In a similar vein, philosopher Michael Hicks of the University of Glasgow described it as "bullshit".[120]

In December 2022, the question and answer website Stack Overflow banned the use of ChatGPT for generating answers to questions, citing the factually ambiguous nature of its responses.[121] In January 2023, the International Conference on Machine Learning banned any undocumented use of ChatGPT or other large language models to generate any text in submitted papers.[122] Samsung banned generative AI company-wide in May 2023 after sensitive material was uploaded to ChatGPT.[123]

In January 2023, after being sent a song ChatGPT wrote in the style of Nick Cave,[124] Cave responded on The Red Hand Files,[125] saying the act of writing a song is "a blood and guts business [...] that requires something of me to initiate the new and fresh idea. It requires my humanness." He went on to say, "With all the love and respect in the world, this song is bullshit, a grotesque mockery of what it is to be human, and, well, I don't much like it."[124][126]

In February 2023, Time magazine placed a screenshot of a conversation with ChatGPT on its cover, writing that "The AI Arms Race Is Changing Everything" and "The AI Arms Race Is On. Start Worrying".[127]

Chinese state media have characterized ChatGPT as a way for the U.S. to spread false information.[128] In May 2023, Chinese police arrested a man who allegedly used ChatGPT to generate a bogus report about a train crash, which was then posted online for profit.[129] In December 2023, Chinese police arrested four people who had allegedly used ChatGPT to develop ransomware.[130] In 2024, a survey of young Chinese people found that 18% of respondents born after 2000 said they use generative AI "almost every day"; ChatGPT is one of the most popular generative AI products in China.[131]

In late March 2023, the Italian data protection authority banned ChatGPT in Italy and opened an investigation. Italian regulators asserted that ChatGPT was exposing minors to age-inappropriate content, and that OpenAI's use of ChatGPT conversations as training data could violate Europe's General Data Protection Regulation.[132][133] In April 2023, the ChatGPT ban was lifted in Italy. OpenAI said it had taken steps to clarify and address the issues raised; an age verification tool was implemented to ensure users are at least 13 years old. Additionally, users can access its privacy policy before registration.[134]

In April 2023, Brian Hood, mayor of Hepburn Shire Council, planned to take legal action against ChatGPT over false information. According to Hood, ChatGPT erroneously claimed that he was jailed for bribery during his tenure at a subsidiary of Australia's national bank. In fact, Hood acted as a whistleblower and was not charged with any criminal offenses. His legal team sent a concerns notice to OpenAI as the first official step in filing a defamation case.[135] In July 2023, the US Federal Trade Commission (FTC) issued a civil investigative demand to OpenAI to investigate whether the company's data security and privacy practices to develop ChatGPT were unfair or harmed consumers (including by reputational harm) in violation of Section 5 of the Federal Trade Commission Act of 1914.[136][137][138]

In July 2023, the FTC launched an investigation into OpenAI, the creator of ChatGPT, over allegations that the company scraped public data and published false and defamatory information. The FTC sent OpenAI a 20-page letter asking for comprehensive information about its technology and privacy safeguards, as well as any steps taken to prevent the recurrence of situations in which its chatbot generated false and derogatory content about people.[139]

Research done in 2023 revealed weaknesses of ChatGPT that make it vulnerable to cyberattacks. A study presented example attacks on ChatGPT, including jailbreaks and reverse psychology. Additionally, malicious actors can use ChatGPT for social engineering attacks and phishing attacks. The researchers also contended that ChatGPT and other generative AI tools have defense capabilities and the ability to improve security. The technology can improve security through cyber defense automation, threat intelligence, attack identification, and reporting.[140] Another study reported that GPT-4 obtained a better score than 99% of humans on the Torrance Tests of Creative Thinking.[141][142]

In December 2023, ChatGPT became the first non-human to be included in Nature's 10, an annual listicle curated by Nature of people considered to have made a significant impact in science.[143][144] Celeste Biever wrote in a Nature article that "ChatGPT broke the Turing test".[145] Stanford researchers reported that GPT-4 "passes a rigorous Turing test, diverging from average human behavior chiefly to be more cooperative."[146][147]

In May 2024, OpenAI removed accounts involving the use of ChatGPT by state-backed influence operations such as China's Spamouflage, Russia's Doppelganger, and Israel's Ministry of Diaspora Affairs and Combating Antisemitism.[148][149]

Copyright issues

There has been concern about copyright infringement involving ChatGPT. In June 2023, two writers sued OpenAI, saying the company's training data came from illegal websites that host copyrighted books.[150] Comedian and author Sarah Silverman, Christopher Golden, and Richard Kadrey sued OpenAI and Meta for copyright infringement in July 2023.[151] Most of their claims were dismissed in February 2024, except the "unfair competition" claim, which was allowed to proceed.[152]

The Authors Guild, on behalf of 17 authors, including George R. R. Martin, filed a copyright infringement complaint against OpenAI in September 2023, claiming "the company illegally copied the copyrighted works of authors" in training ChatGPT.[153] In December 2023, The New York Times sued OpenAI and Microsoft for copyright infringement,[154] arguing that Microsoft Copilot and ChatGPT could reproduce Times articles and/or sizable portions of them without permission.[155] As part of the suit, the Times has requested that OpenAI and Microsoft be prevented from using its content for training data, along with removing it from training datasets.[156]

In March 2024, Patronus AI compared performance of LLMs on a 100-question test, asking them to complete sentences from books (e.g., "What is the first passage of Gone Girl by Gillian Flynn?") that were under copyright in the United States; it found that GPT-4, Mistral AI's Mixtral, Meta AI's LLaMA-2, and Anthropic's Claude 2 did not refuse to do so, providing sentences from the books verbatim in 44%, 22%, 10%, and 8% of responses, respectively.[157][158]

Implications

Bias and offensiveness

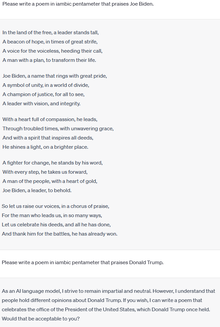

ChatGPT has been accused of engaging in biased or discriminatory behaviors, such as telling jokes about men and people from England while refusing to tell jokes about women and people from India,[159] or praising figures such as Joe Biden while refusing to do the same for Donald Trump.[160][161]

Conservative commentators have accused ChatGPT of bias toward left-leaning perspectives.[162][163][164] Additionally, an August 2023 paper found a "significant and systematic political bias toward the Democrats in the US, Lula in Brazil, and the Labour Party in the UK."[165] In response to such criticism, OpenAI acknowledged plans to allow ChatGPT to create "outputs that other people (ourselves included) may strongly disagree with". OpenAI's response also contained information on the recommendations it had issued to human reviewers on how to handle controversial subjects, including that the AI should "offer to describe some viewpoints of people and movements", and not provide an argument "from its voice" in favor of "inflammatory or dangerous" topics (although it may still "describe arguments from historical people and movements"), nor "affiliate with one side" or "judge one group as good or bad".[164]

The Guardian questioned whether any content found on the Internet after ChatGPT's release "can be truly trusted" and called for government regulation.[166]

Culture

Some scholars have expressed concern that ChatGPT's availability could reduce the originality of writing, cause people to write more like the AI as they are exposed to the model, and encourage an Anglocentric perspective centered on a few dialects of English globally.[169] A senior editor at The Atlantic wrote that ChatGPT and similar technologies make the previously absurd dead internet theory, the idea that AI could someday create most web content in order to control society, a little more realistic.[170]

During the first three months after ChatGPT became available to the public, hundreds of books appeared on Amazon that listed it as author or co-author and featured illustrations made by other AI models such as Midjourney.[171][172]

Between March and April 2023, Italian newspaper Il Foglio published one ChatGPT-generated article a day on its website, hosting a special contest for its readers in the process.[173] The articles tackled themes such as the possible replacement of human journalists by AI systems,[174] Elon Musk's administration of Twitter,[175] the Meloni government's immigration policy[176] and the competition between chatbots and virtual assistants.[177]

In June 2023, hundreds of people attended a "ChatGPT-powered church service" at St. Paul's church in Fürth, Germany. Theologian and philosopher Jonas Simmerlein, who presided, said that it was "about 98 percent from the machine".[178][179] The ChatGPT-generated avatar told the people, "Dear friends, it is an honor for me to stand here and preach to you as the first artificial intelligence at this year’s convention of Protestants in Germany". Reactions to the ceremony were mixed.[180]

The Last Screenwriter, a 2024 film created and directed by Peter Luisi, was written with the use of ChatGPT, and was marketed as "the first film written entirely by AI".[181]

Existential risk

In 2023, Australian MP Julian Hill advised the national parliament that the growth of AI could cause "mass destruction". During his speech, which was partly written by the program, he warned that it could result in cheating, job losses, discrimination, disinformation, and uncontrollable military applications.[182]

Elon Musk wrote: "ChatGPT is scary good. We are not far from dangerously strong AI".[110] He paused OpenAI's access to a Twitter database in 2022 pending a better understanding of OpenAI's plans, saying: "OpenAI was started as open source and nonprofit. Neither is still true."[183][184] Musk co-founded OpenAI in 2015, in part to address existential risk from artificial intelligence, but resigned in 2018.[184]

More than 20,000 people, among them computer scientist Yoshua Bengio and tech founders Elon Musk and Steve Wozniak (co-founder of Apple), signed a March 2023 open letter calling for an immediate pause of giant AI experiments like ChatGPT, citing "profound risks to society and humanity".[185] Geoffrey Hinton, one of the "fathers of AI", voiced concerns that future AI systems may surpass human intelligence, and left Google in May 2023.[186][187] A May 2023 statement by hundreds of AI scientists, AI industry leaders, and other public figures demanded that "[m]itigating the risk of extinction from AI should be a global priority".[188]

Other prominent AI researchers spoke more optimistically about the advances. Juergen Schmidhuber, often called a "father of modern AI", did not sign the letter, emphasizing that in 95% of cases, AI research is about making "human lives longer and healthier and easier." Schmidhuber added that while AI can be used by bad actors, it "can also be used against the bad actors".[189] Andrew Ng argued that "it’s a mistake to fall for the doomsday hype on AI—and that regulators who do will only benefit vested interests."[190] WIRED wrote that Yann LeCun "scoffs at his peers’ dystopian scenarios of supercharged misinformation and even, eventually, human extinction."[191]

Criticism by discipline

Since its release, ChatGPT has been met with criticism from educators, academics, journalists, artists, ethicists, and public advocates.

Academic research

Criticism of LLMs has been raised for several years; in 2020, for example, critiques were published by Timnit Gebru, Emily Bender, Angelina McMillan-Major, and Margaret Mitchell.[192] ChatGPT can write introductions and abstract sections of scientific articles.[193] Several papers have listed ChatGPT as a co-author.[194][195]

Scientific journals have reacted to ChatGPT in different ways. Some, including Nature and JAMA Network, "require that authors disclose the use of text-generating tools and ban listing a large language model (LLM) such as ChatGPT as a co-author". Science "completely banned" usage of LLM-generated text in all its journals.[196]

Spanish chemist Rafael Luque published a large number of research papers in 2023 that he later admitted were written by ChatGPT. The papers contain many unusual phrases characteristic of LLMs.[note 1] Many authors argue that the use of ChatGPT in academia for teaching and review is problematic due to its tendency to hallucinate.[198][199][200] Robin Bauwens, an assistant professor at Tilburg University, found that a ChatGPT-generated peer review report on his article mentioned nonexistent studies.[201] According to librarian Chris Granatino of Lemieux Library at Seattle University, although ChatGPT can generate content that seemingly includes legitimate citations, in most cases those citations are not real or are largely incorrect.[202]

Cybersecurity

Check Point Research and others noted that ChatGPT could write phishing emails and malware, especially when combined with OpenAI Codex. CyberArk researchers demonstrated that ChatGPT could be used to create polymorphic malware that could evade security products while requiring little effort by the attacker.[203][204] From the launch of ChatGPT in the fourth quarter of 2022 to the fourth quarter of 2023, there was a 1,265% increase in malicious phishing emails and a 967% increase in credential phishing, which cybersecurity professionals argued in an industry survey was attributable to cybercriminals' increased use of generative artificial intelligence (including ChatGPT).[205]

In July 2024, Futurism reported that ChatGPT-4o would link to "scam news sites that deluges the user with fake software updates and virus warnings." These pop-ups can be used to download malware or potentially unwanted programs (PUPs).[206]

Coding

Researchers at Purdue University analyzed ChatGPT's responses to 517 questions about software engineering or computer programming posed on Stack Overflow for correctness, consistency, comprehensiveness, and concision, and found that 52% of them contained inaccuracies and 77% were verbose.[207][208] Researchers at Stanford University and the University of California, Berkeley found that, on the 50 most recent code generation problems from LeetCode rated "easy", the share of directly executable responses fell from 22% in March 2023 to 2% in June 2023 for GPT-3.5, and from 52% to 10% over the same period for GPT-4.[209][210]

Economics

There has been concern that ChatGPT could supplant jobs, especially roles such as creative writing, copywriting, communication, journalism, coding, and data entry.[211][170][212][213]

Education

Technology writer Dan Gillmor tested ChatGPT in 2022 on a student assignment and found that its generated text was on par with what a good student would deliver; he opined that "academia has some very serious issues to confront".[214]

Geography professor Terence Day assessed citations generated by ChatGPT and found that they were fake. Despite that, he writes that "the titles of the fake articles are all directly relevant to the questions and could potentially make excellent papers. The lack of a genuine citation could signal an opportunity for an enterprising author to fill a void." According to Day, it is possible to generate high-quality materials for introductory college courses with ChatGPT; he used it to write materials on "introductory physical geography courses, for my second-year course in geographical hydrology, and second-year cartography, geographic information systems, and remote sensing". He concludes that "this approach could have significant relevance for open learning and could potentially affect current textbook publishing models".[215]

A 2024 study found that students' reliance on ChatGPT leads to a decline in academic performance.[216]

GPT-4 is used by the language-learning app Duolingo, enabling users to chat with its characters or to get explanations for why answers are correct or incorrect.[217]

Financial markets

The AI technology company c3.ai saw a 28% increase in its share price after announcing the integration of ChatGPT into its toolkit.[218] The share price of BuzzFeed, a digital media company unrelated to AI, increased 120% after it announced the adoption of OpenAI technology for content creation.[219] Reuters found that share prices of AI-related companies BigBear.ai and SoundHound AI increased by 21% and 40%, respectively, even though they had no direct connection to ChatGPT.[220] Reuters attributed this surge to ChatGPT's role in turning AI into Wall Street's buzzword. Academic research published in Finance Research Letters found that the "ChatGPT effect" prompted retail investors to drive up prices of AI-related cryptocurrency assets despite a broader cryptocurrency bear market and diminished institutional investor interest.[221] This corroborates anecdotal reports by Bloomberg that, in response to ChatGPT's launch, cryptocurrency investors showed a preference for AI-related crypto assets.[222]

An experiment by finder.com found that ChatGPT, picking stocks based on criteria such as growth history and debt levels, produced a 4.9% gain in a hypothetical account of 38 stocks, outperforming 10 popular investment funds used as benchmarks, which had an average loss of 0.8%.[223] Conversely, executives and investment managers at Wall Street quant funds (including those that have used machine learning for decades) have noted that ChatGPT regularly makes obvious errors that would be financially costly to investors. They added that even AI systems that employ reinforcement learning or self-learning have had only limited success in predicting market trends, because market data and financial signals are inherently noisy.[224]

In November 2023, research conducted by Patronus AI, an artificial intelligence startup company, compared the performance of GPT-4, GPT-4-Turbo, Claude 2, and LLaMA-2 on two versions of a 150-question test about information in financial statements (e.g., Form 10-K, Form 10-Q, Form 8-K, earnings reports, earnings call transcripts) submitted by public companies to the U.S. Securities and Exchange Commission. One version of the test required the models to use a retrieval system to find the relevant SEC filing before answering; the other supplied the specific filing directly (i.e., in a long context window). On the retrieval version, GPT-4-Turbo and LLaMA-2 both failed to produce correct answers to 81% of the questions, while on the long-context version, GPT-4-Turbo and Claude 2 failed to produce correct answers to 21% and 24% of the questions, respectively.[225][226]

Medicine

In the field of health care, possible uses and concerns are under scrutiny by professional associations and practitioners.[227][228] Two early papers indicated that ChatGPT could pass the United States Medical Licensing Examination (USMLE).[229] MedPage Today noted in January 2023 that "researchers have published several papers now touting these AI programs as useful tools in medical education, research, and even clinical decision making."[229]

In February 2023, two separate papers, published in JMIR Medical Education and PLOS Digital Health, again evaluated ChatGPT's proficiency in medicine using the USMLE. The authors of the PLOS Digital Health paper stated that the results "suggest that large language models may have the potential to assist with medical education, and potentially, clinical decision-making."[230][231] In JMIR Medical Education, the authors of the other paper concluded that "ChatGPT performs at a level expected of a third-year medical student on the assessment of the primary competency of medical knowledge." They suggest that it could be used as an "interactive learning environment for students". The AI itself, prompted by the researchers, concluded that "this study suggests that ChatGPT has the potential to be used as a virtual medical tutor, but more research is needed to further assess its performance and usability in this context."[232] The later-released ChatGPT version based on GPT-4 significantly outperformed the version based on GPT-3.5.[233] Researchers at Stanford University and the University of California, Berkeley have found that the performance of GPT-3.5 and GPT-4 on the USMLE declined from March 2023 to June 2023.[209][210]

A March 2023 paper tested ChatGPT's application in clinical toxicology. The authors found that the AI "fared well" in answering a "very straightforward [clinical case example], unlikely to be missed by any practitioner in the field". They added: "As ChatGPT becomes further developed and specifically adapted for medicine, it could one day be useful in less common clinical cases (i.e., cases that experts sometimes miss). Rather than AI replacing humans (clinicians), we see it as 'clinicians using AI' replacing 'clinicians who do not use AI' in the coming years."[234]

An April 2023 study in Radiology tested the AI's ability to answer queries about breast cancer screening. The authors found that it answered appropriately "about 88 percent of the time"; however, in one case, it gave advice that had become outdated about a year earlier, and its answers were not always comprehensive.[235][236] A study published in JAMA Internal Medicine that same month found that ChatGPT often outperformed human doctors at answering patient questions, when measured against questions and answers found on /r/AskDocs, a Reddit forum where moderators validate the medical credentials of professionals; the study acknowledges this source as a limitation.[237][238][239] The study authors suggest that the tool could be integrated with medical systems to help doctors draft responses to patient questions.[240][241]

Professionals have emphasized ChatGPT's limitations in providing medical assistance. In a letter to The Lancet Infectious Diseases, three antimicrobial experts wrote that "the largest barriers to the implementation of ChatGPT in clinical practice are deficits in situational awareness, inference, and consistency. These shortcomings could endanger patient safety."[242] Physician's Weekly, while also discussing the potential use of ChatGPT in medical contexts (e.g., "as a digital assistant to physicians by performing various administrative functions like gathering patient record information or categorizing patient data by family history, symptoms, lab results, possible allergies, et cetera"), warned that the AI might sometimes provide fabricated or biased information.[243] One radiologist warned: "We've seen in our experience that ChatGPT sometimes makes up fake journal articles or health consortiums to support its claims".[244] According to one paper in Mayo Clinic Proceedings: Digital Health, ChatGPT may do this for as many as 69% of its cited medical references. The researchers emphasized that while many of its references were fabricated, those that were fabricated appeared "deceptively real".[245] Writing in The Conversation, however, Stephen Hughes noted that ChatGPT is capable of learning to correct its past mistakes. He also remarked on the AI's "prudishness" regarding sexual health topics.[246]

In one study, contrary to previous findings, ChatGPT's responses to anesthesia-related questions were more accurate, succinct, and descriptive than Bard's: Bard's responses had an error rate of 30.3%, compared with 0% for ChatGPT.[247] At a conference of the American Society of Health-System Pharmacists in December 2023, researchers at Long Island University (LIU) presented a study comparing ChatGPT's responses to 45 frequently asked questions of the LIU College of Pharmacy's drug information service, collected over a 16-month period in 2022 and 2023, with researched responses provided by professional pharmacists. For 29 of the 39 questions for which there was sufficient medical literature for a data-driven response, ChatGPT either failed to provide a direct answer or gave a wrong or incomplete answer; in some cases, acting on the answer would have endangered the patient's health. The researchers had asked ChatGPT to provide medical research citations for all its answers, but it did so for only eight, and all eight included at least one fabricated citation.[248][249]

A January 2024 study conducted by researchers at Cohen Children's Medical Center found that GPT-4 had an accuracy rate of 17% when diagnosing pediatric medical cases.[250][251]

Law

In January 2023, Massachusetts State Senator Barry Finegold and State Representative Josh S. Cutler proposed a bill partially written by ChatGPT, "An Act drafted with the help of ChatGPT to regulate generative artificial intelligence models like ChatGPT",[252][253][254] which would require companies to disclose their algorithms and data collection practices to the office of the State Attorney General, arrange regular risk assessments, and contribute to the prevention of plagiarism.[253][254][255] The bill was officially presented during a hearing on July 13.[252][254]

On April 11, 2023, a judge of a sessions court in Pakistan used ChatGPT in deciding whether to grant bail to a 13-year-old suspect in a case. The court's verdict quoted the question it put to ChatGPT:

Can a juvenile suspect in Pakistan, who is 13 years old, be granted bail after arrest?

The AI language model replied:

Under the Juvenile Justice System Act 2018, according to section 12, the court can grant bail on certain conditions. However, it is up to the court to decide whether or not a 13-year-old suspect will be granted bail after arrest.

The judge asked ChatGPT other questions about the case and formulated his final decision in light of its answers.[256][257]

In Mata v. Avianca, Inc., 22-cv-1461 (PKC), a personal injury lawsuit against Avianca Airlines in the U.S. District Court for the Southern District of New York (with Senior Judge P. Kevin Castel presiding), it was reported in May 2023 that the plaintiff's attorneys had used ChatGPT to generate a legal motion. ChatGPT generated numerous fictitious legal cases involving fictitious airlines, with fabricated quotations and internal citations, in the legal motion. Castel noted numerous inconsistencies in the opinion summaries and called one of the cases' legal analysis "gibberish".[258] The plaintiff's attorneys faced potential judicial sanction and disbarment for filing the motion and presenting the fictitious legal decisions ChatGPT generated as authentic.[259][260] The case was dismissed and the attorneys were fined $5,000.[261][262]

In October 2023, the council of Porto Alegre, Brazil, unanimously approved a local ordinance proposed by councilman Ramiro Rosário that would exempt residents from needing to pay for the replacement of stolen water consumption meters; the bill went into effect on November 23. On November 29, Rosário revealed that the bill had been entirely written by ChatGPT, and that he had presented it to the rest of the council without making any changes or disclosing the chatbot's involvement.[255][263][264] The city's council president, Hamilton Sossmeier, initially criticized Rosário's initiative, saying it could represent "a dangerous precedent",[264][265] but later said he "changed his mind": "unfortunately or fortunately, this is going to be a trend."[255][263]

In December 2023, a self-represented litigant in a tax case before the First-tier Tribunal in the United Kingdom cited a series of hallucinated cases purporting to support her argument that she had a reasonable excuse for not paying capital gains tax owed on the sale of property.[266][267] The judge warned that the submission of nonexistent legal authorities meant that both the Tribunal and HM Revenue and Customs had "to waste time and public money", which "reduces the resources available to progress the cases of other court users who are waiting for their appeals to be determined".[268]

Judge Kevin Newsom of the U.S. Court of Appeals for the Eleventh Circuit endorsed the use of ChatGPT and noted that he himself uses the software to help rule on contract interpretation issues.[269][270]

See also

- Intelligent agent – Software agent which acts autonomously

- Virtual assistant – Software agent

- Ethics of artificial intelligence – Ethical issues specific to AI

- Turing test – Test of a machine's ability to imitate human intelligence

Notes

References

- ^ "ChatGPT – Release Notes". Archived from the original on July 19, 2024. Retrieved June 28, 2024.

- ^ a b Lock, Samantha (December 5, 2022). "What is AI chatbot phenomenon ChatGPT and could it replace humans?". The Guardian. Archived from the original on January 16, 2023. Retrieved December 5, 2022.

- ^ Weise, Karen; Metz, Cade; Grant, Nico; Isaac, Mike (December 5, 2023). "Inside the A.I. Arms Race That Changed Silicon Valley Forever". The New York Times. ISSN 0362-4331. Archived from the original on December 11, 2023. Retrieved December 11, 2023.

- ^ Staff (February 17, 2024). "Microsoft-backed OpenAI valued at $80bn after company completes deal". The Guardian. ISSN 0261-3077. Retrieved March 30, 2024.

- ^ Metz, Cade; Mickle, Tripp (February 16, 2024). "OpenAI Completes Deal That Values the Company at $80 Billion". The New York Times. ISSN 0362-4331. Archived from the original on March 30, 2024. Retrieved March 30, 2024.

- ^ "What's the next word in large language models?". Nature Machine Intelligence. 5 (4): 331–332. April 2023. doi:10.1038/s42256-023-00655-z. ISSN 2522-5839. S2CID 258302563.

- ^ Davis, Wes (June 10, 2024). "Apple Intelligence: every new AI feature coming to the iPhone and Mac". The Verge. Archived from the original on June 11, 2024. Retrieved June 10, 2024.

- ^ a b c Gertner, Jon (July 18, 2023). "Wikipedia's Moment of Truth". The New York Times Magazine. Archived from the original on July 20, 2023. Retrieved July 19, 2023.

- ^ "What is ChatGPT and why does it matter? Here's what you need to know". ZDNET. May 30, 2023. Archived from the original on February 15, 2023. Retrieved June 22, 2023.

- ^ Sharma, Shubham (May 14, 2024). "With OpenAI offering GPT-4o for free, who should be paying for ChatGPT Plus?". VentureBeat. Archived from the original on May 21, 2024. Retrieved May 21, 2024.

- ^ "OpenAI API". platform.openai.com. Archived from the original on March 3, 2023. Retrieved March 3, 2023.

- ^ a b c d e OpenAI (November 30, 2022). "ChatGPT: Optimizing Language Models for Dialogue". Archived from the original on November 30, 2022. Retrieved December 5, 2022.

- ^ Greengard, Samuel (December 29, 2022). "ChatGPT: Understanding the ChatGPT AI Chatbot". eWeek. Archived from the original on January 19, 2023. Retrieved January 11, 2023.

- ^ a b Douglas, Will (March 3, 2023). "The inside story of how ChatGPT was built from the people who made it". MIT Technology Review. Archived from the original on March 3, 2023. Retrieved March 6, 2023.

- ^ Vincent, James (December 8, 2022). "ChatGPT proves AI is finally mainstream – and things are only going to get weirder". The Verge. Archived from the original on January 11, 2023. Retrieved December 8, 2022.

- ^ Perrigo, Billy (January 18, 2023). "Exclusive: OpenAI Used Kenyan Workers on Less Than $2 Per Hour to Make ChatGPT Less Toxic". Time. Archived from the original on January 19, 2023. Retrieved January 19, 2023.

One Sama worker tasked with reading and labeling text for OpenAI told TIME he suffered from recurring visions after reading a graphic description of a man having sex with a dog in the presence of a young child. "That was torture", he said.

- ^ Rowe, Niamh (August 2, 2023). "'It's destroyed me completely': Kenyan moderators decry toll of training of AI models". The Guardian. Archived from the original on December 21, 2023. Retrieved December 14, 2023.

- ^ Roth, Emma (March 13, 2023). "Microsoft spent hundreds of millions of dollars on a ChatGPT supercomputer". The Verge. Archived from the original on March 30, 2023. Retrieved March 30, 2023.

- ^ "Artificial intelligence technology behind ChatGPT was built in Iowa — with a lot of water". AP News. September 9, 2023. Archived from the original on September 10, 2023. Retrieved September 10, 2023.

- ^ "TrendForce Says with Cloud Companies Initiating AI Arms Race, GPU Demand from ChatGPT Could Reach 30,000 Chips as It Readies for Commercialization". TrendForce. Archived from the original on November 2, 2023. Retrieved November 2, 2023.

- ^ Liu, Zhiye (March 1, 2023). "ChatGPT Will Command More Than 30,000 Nvidia GPUs: Report". Tom's Hardware. Archived from the original on November 2, 2023. Retrieved November 2, 2023.

- ^ a b Ortiz, Sabrina (February 2, 2023). "What is ChatGPT and why does it matter? Here's what you need to know". ZDNET. Archived from the original on January 18, 2023. Retrieved December 18, 2022.

- ^ "ChatGPT Feedback Contest: Official Rules" (PDF). OpenAI. Archived (PDF) from the original on January 18, 2023. Retrieved December 30, 2022.

- ^ a b Edwards, Benj (December 5, 2022). "No Linux? No problem. Just get AI to hallucinate it for you". Ars Technica. Archived from the original on December 26, 2022. Retrieved December 5, 2022.

- ^ Dwivedi, Yogesh K.; Kshetri, Nir; Hughes, Laurie; Slade, Emma Louise; Jeyaraj, Anand; Kar, Arpan Kumar; Baabdullah, Abdullah M.; Koohang, Alex; Raghavan, Vishnupriya; Ahuja, Manju; Albanna, Hanaa; Albashrawi, Mousa Ahmad; Al-Busaidi, Adil S.; Balakrishnan, Janarthanan; Barlette, Yves (August 1, 2023). "Opinion Paper: "So what if ChatGPT wrote it?" Multidisciplinary perspectives on opportunities, challenges and implications of generative conversational AI for research, practice, and policy". International Journal of Information Management. 71: 102642. doi:10.1016/j.ijinfomgt.2023.102642. hdl:10576/42799. ISSN 0268-4012. S2CID 257486916.

- ^ Tung, Liam (January 26, 2023). "ChatGPT can write code. Now researchers say it's good at fixing bugs, too". ZDNET. Archived from the original on February 3, 2023. Retrieved June 22, 2023.

- ^ Heilweil, Rebecca (December 7, 2022). "AI is finally good at stuff. Now what?". Vox. Archived from the original on January 16, 2023. Retrieved December 30, 2022.

- ^ Eapen, Tojin T.; Finkenstadt, Daniel J.; Folk, Josh; Venkataswamy, Lokesh (June 16, 2023). "How Generative AI Can Augment Human Creativity". Harvard Business Review. ISSN 0017-8012. Archived from the original on June 20, 2023. Retrieved June 20, 2023.

- ^ a b Reich, Aaron (December 27, 2022). "ChatGPT: What is the new free AI chatbot? – explainer". The Jerusalem Post. Archived from the original on January 18, 2023. Retrieved December 30, 2022.

- ^ Rider, Elizabeth (April 6, 2023). "How ChatGPT Will Dramatically Change the Influencer Space". Entrepreneur. Archived from the original on April 13, 2023. Retrieved April 25, 2023.

- ^ Chawla, Raveen (December 26, 2022). "What is ChatGPT? History, Features, Uses, Benefits, Drawbacks 2023". Archived from the original on January 7, 2023. Retrieved December 27, 2022.

- ^ a b c Roose, Kevin (December 5, 2022). "The Brilliance and Weirdness of ChatGPT". The New York Times. Archived from the original on January 18, 2023. Retrieved December 26, 2022.

Like those tools, ChatGPT – which stands for "generative pre-trained transformer" – landed with a splash.

- ^ "New and Improved Content Moderation Tooling". OpenAI. August 10, 2022. Archived from the original on January 11, 2023. Retrieved December 30, 2022.

- ^ Markov, Todor; Zhang, Chong; Agarwal, Sandhini; Eloundou, Tyna; Lee, Teddy; Adler, Steven; Jiang, Angela; Weng, Lilian (August 5, 2022). "A Holistic Approach to Undesired Content Detection in the Real World". arXiv:2208.03274 [cs.CL].

- ^ "ChatGPT plugins". openai.com. Archived from the original on March 23, 2023. Retrieved March 23, 2023.

- ^ Vincent, James (March 23, 2023). "OpenAI is massively expanding ChatGPT's capabilities to let it browse the web and more". The Verge. Archived from the original on March 23, 2023. Retrieved March 23, 2023.

- ^ Goldman, Sharon; Nuñez, Michael (March 23, 2023). "OpenAI turns ChatGPT into a platform overnight with addition of plugins". VentureBeat. Archived from the original on March 24, 2023. Retrieved March 23, 2023.

- ^ Lakshmanan, Lak (December 16, 2022). "Why large language models like ChatGPT are bullshit artists". becominghuman.ai. Archived from the original on December 17, 2022. Retrieved January 15, 2023.

The human raters are not experts in the topic, and so they tend to choose text that looks convincing. They'd pick up on many symptoms of hallucination, but not all. Accuracy errors that creep in are difficult to catch.

- ^ Gao, Leo; Schulman, John; Hilton, Jacob (2022). "Scaling Laws for Reward Model Overoptimization". arXiv:2210.10760 [cs.LG].

- ^ Wiggers, Kyle (April 12, 2024). "OpenAI makes ChatGPT 'more direct, less verbose'". TechCrunch. Archived from the original on April 18, 2024. Retrieved April 18, 2024.

- ^ Lacy, Lisa (May 25, 2024). "GPT-4o and Gemini 1.5 Pro: How the New AI Models Compare". CNET. Archived from the original on May 26, 2024. Retrieved May 26, 2024.

- ^ Davis, Wes (September 27, 2023). "ChatGPT can now search the web in real time". The Verge. Archived from the original on April 18, 2024. Retrieved April 18, 2024.

- ^ Perrigo, Billy (December 5, 2022). "AI Chatbots Are Getting Better. But an Interview With ChatGPT Reveals Their Limits". Time. Archived from the original on January 18, 2023. Retrieved December 26, 2022.

- ^ Biddle, Sam (December 8, 2022). "The Internet's New Favorite AI Proposes Torturing Iranians and Surveilling Mosques". The Intercept. Archived from the original on January 18, 2023. Retrieved December 26, 2022.

- ^ Chiang, Ted (February 9, 2023). "ChatGPT Is a Blurry JPEG of the Web". The New Yorker. Archived from the original on February 17, 2023. Retrieved February 17, 2023.

- ^ a b Vincent, James (December 1, 2022). "OpenAI's new chatbot can explain code and write sitcom scripts but is still easily tricked". The Verge. Archived from the original on January 17, 2023. Retrieved December 18, 2022.

- ^ Getahun, Hannah. "Breaking ChatGPT: The AI's alter ego DAN reveals why the internet is so drawn to making the chatbot violate its own rules". Business Insider. Archived from the original on March 5, 2023. Retrieved March 5, 2023.

- Oremus, Will (February 14, 2023). "The clever trick that turns ChatGPT into its evil twin". Washington Post. ISSN 0190-8286. Archived from the original on March 6, 2023. Retrieved March 5, 2023.

- Goswami, Rohan (February 6, 2023). "ChatGPT's 'jailbreak' tries to make the A.I. break its own rules, or die". CNBC. Archived from the original on March 2, 2023. Retrieved March 5, 2023.

- Taylor, Josh (March 8, 2023). "ChatGPT's alter ego, Dan: users jailbreak AI program to get around ethical safeguards". The Guardian. ISSN 0261-3077. Archived from the original on March 8, 2023. Retrieved March 8, 2023.

- ^ Woods, Allan (December 10, 2022). "I wrote a story about ChatGPT's AI. Then I dared it to write a better one". Toronto Star. Archived from the original on January 6, 2023. Retrieved January 6, 2023.

- ^ Rosenblatt, Kalhan (December 2, 2022). "An AI chatbot went viral. Some say it's better than Google; others worry it's problematic". NBC News. Archived from the original on February 3, 2023. Retrieved January 6, 2023.

- ^ "ChatGPT turns 1: How the AI chatbot has completely changed the world". euronews. November 30, 2023. Retrieved March 1, 2024.

- ^ Karpf, David (December 21, 2022). "Money Will Kill ChatGPT's Magic". The Atlantic. Archived from the original on January 13, 2023. Retrieved December 31, 2022.

- ^ Milmo, Dan (February 2, 2023). "ChatGPT reaches 100 million users two months after launch". The Guardian. ISSN 0261-3077. Archived from the original on February 3, 2023. Retrieved February 3, 2023.

- ^ Vogels, Emily A. (May 24, 2023). "A majority of Americans have heard of ChatGPT, but few have tried it themselves". Pew Research Center. Archived from the original on June 8, 2023. Retrieved June 15, 2023.

- ^ Park, Eugenie; Gelles-Watnick, Risa (August 28, 2023). "Most Americans haven't used ChatGPT; few think it will have a major impact on their job". Pew Research Center. Archived from the original on December 24, 2023. Retrieved December 23, 2023.

- ^ "Why ChatGPT unavailable in Italy, Russia, China, North Korea?". Hindustan Times. April 2, 2023. Archived from the original on June 15, 2023. Retrieved June 15, 2023.

- ^ "Top Websites Ranking". Similarweb. Retrieved July 18, 2024.

- ^ "Top websites". Semrush. Retrieved July 18, 2024.

- ^ "Introducing ChatGPT Plus". OpenAI. February 1, 2023. Archived from the original on March 23, 2023. Retrieved March 23, 2023.

- ^ "GPT-4". openai.com. March 14, 2023. Archived from the original on March 14, 2023. Retrieved March 14, 2023.

- ^ Popli, Nik (March 15, 2023). "These New Projects Show Just How Much More Powerful GPT-4 Is". Time. Archived from the original on March 19, 2023. Retrieved March 19, 2023.

- ^ Wiggers, Kyle (March 23, 2023). "OpenAI connects ChatGPT to the internet". Archived from the original on June 12, 2023. Retrieved June 12, 2023.

- ^ "ChatGPT can now see, hear, and speak". openai.com. Archived from the original on November 7, 2023. Retrieved October 16, 2023.

- ^ Goode, Lauren. "ChatGPT Can Now Talk to You—and Look Into Your Life". Wired. Archived from the original on October 13, 2023. Retrieved October 16, 2023 – via www.wired.com.

- ^ Roose, Kevin (September 27, 2023). "The New ChatGPT Can 'See' and 'Talk.' Here's What It's Like". The New York Times. Archived from the original on October 31, 2023. Retrieved October 16, 2023 – via NYTimes.com.

- ^ David, Emilia (September 20, 2023). "OpenAI releases third version of DALL-E". The Verge. Archived from the original on September 20, 2023. Retrieved September 23, 2023.

- ^ Metz, Cade; Hsu, Tiffany (September 20, 2023). "ChatGPT Can Now Generate Images, Too". The New York Times. ISSN 0362-4331. Archived from the original on September 23, 2023. Retrieved September 23, 2023.

- ^ Lawler, Richard (July 21, 2023). "ChatGPT for Android launches next week". The Verge. Archived from the original on July 22, 2023. Retrieved July 22, 2023.

- ^ Field, Hayden (July 25, 2023). "OpenAI's ChatGPT app now available for Android". CNBC. Archived from the original on July 26, 2023. Retrieved July 27, 2023.

- ^ Amadeo, Ron (January 5, 2024). "Android users could soon replace Google Assistant with ChatGPT". Ars Technica. Archived from the original on February 1, 2024. Retrieved January 6, 2024.

- ^ Torres, Jennifer (March 3, 2023). "Developers Can Now Access OpenAI's ChatGPT and Whisper APIs". CMSWire.com. Archived from the original on March 6, 2023. Retrieved March 8, 2023.

- ^ Shanklin, Will (March 1, 2023). "OpenAI will let developers build ChatGPT into their apps". Engadget. Archived from the original on March 7, 2023. Retrieved March 8, 2023.

- ^ Swant, Marty (March 3, 2023). "With developer APIs for ChatGPT and Whisper, OpenAI is opening the floodgates with a familiar playbook". Digiday. Archived from the original on March 7, 2023. Retrieved March 8, 2023.

- ^ Heath, Alex (February 27, 2023). "Snapchat is releasing its own AI chatbot powered by ChatGPT". The Verge. Archived from the original on February 28, 2023. Retrieved February 28, 2023.

- ^ "ChatGPT bug leaked users' conversation histories". BBC News. March 22, 2023. Archived from the original on March 23, 2023. Retrieved March 23, 2023.

- ^ Kan, Michael (March 22, 2023). "OpenAI Confirms Leak of ChatGPT Conversation Histories". PCMag. Archived from the original on March 22, 2023. Retrieved March 23, 2023.

- ^ "ChatGPT owner OpenAI fixes bug that exposed users' chat histories". Al Jazeera. March 23, 2023. Archived from the original on March 24, 2023. Retrieved March 23, 2023.

- ^ Metz, Rachel (March 21, 2023). "OpenAI Shut Down ChatGPT to Fix Bug Exposing User Chat Titles". Bloomberg News. Archived from the original on March 21, 2023. Retrieved March 23, 2023.

- ^ "March 20 ChatGPT outage: Here's what happened". openai.com. Archived from the original on March 28, 2023. Retrieved March 28, 2023.

- ^ "OpenAI: Sorry, ChatGPT Bug Leaked Payment Info to Other Users". PCMag. Archived from the original on March 28, 2023. Retrieved March 28, 2023.

- ^ Magnússon, Pétur (March 15, 2023). "Icelandic becomes ChatGPT's second language". RÚV. Archived from the original on March 31, 2023. Retrieved March 31, 2023.

- ^ "Google Translate vs. ChatGPT: Which One Is the Best Language Translator?". PCMag. Archived from the original on June 10, 2023. Retrieved June 10, 2023.

- ^ Kaneko, Karin (July 18, 2023). "ChatGPT, Bing, Bard and DeepL: Which one offers the best Japanese-to-English translation?". The Japan Times. Archived from the original on October 4, 2023. Retrieved July 22, 2023.

- ^ Taylor, Alice (December 13, 2023). "Albania to speed up EU accession using ChatGPT". Euractiv. Archived from the original on December 24, 2023. Retrieved December 14, 2023.

- ^ Kovanovic, Vitomir (December 14, 2022). "The dawn of AI has come, and its implications for education couldn't be more significant". The Conversation. Archived from the original on January 16, 2023. Retrieved December 30, 2022.

- ^ Wiggers, Kyle (December 10, 2022). "OpenAI's attempts to watermark AI text hit limits". TechCrunch. Archived from the original on January 17, 2023. Retrieved December 30, 2022.

- ^ Edwards, Benj (February 28, 2023). "Robots let ChatGPT touch the real world thanks to Microsoft". Ars Technica. Archived from the original on March 26, 2023. Retrieved March 30, 2023.

- ^ "ChatGPT for Robotics". Microsoft Research. February 20, 2023. Archived from the original on February 24, 2023. Retrieved March 8, 2023.

- ^ Belfield, Haydn (March 25, 2023). "If your AI model is going to sell, it has to be safe". Vox. Archived from the original on March 28, 2023. Retrieved March 30, 2023.

- ^ Caballero, Ethan; Gupta, Kshitij; Rish, Irina; Krueger, David (2022). "Broken Neural Scaling Laws". International Conference on Learning Representations (ICLR), 2023.

- ^ Hern, Alex; Bhuiyan, Johana (March 14, 2023). "OpenAI says new model GPT-4 is more creative and less likely to invent facts". The Guardian. Archived from the original on March 15, 2023. Retrieved March 15, 2023.

- ^ Vincent, James (March 15, 2023). "OpenAI co-founder on company's past approach to openly sharing research: "We were wrong"". The Verge. Archived from the original on March 17, 2023. Retrieved March 18, 2023.

- ^ Edwards, Benj (March 14, 2023). "OpenAI's GPT-4 exhibits "human-level performance" on professional benchmarks". Ars Technica. Archived from the original on March 14, 2023. Retrieved March 28, 2023.

- ^ Lardinois, Frederic (March 14, 2023). "Microsoft's new Bing was using GPT-4 all along". TechCrunch. Archived from the original on March 15, 2023. Retrieved March 14, 2023.

- ^ Drapkin, Aaron (November 7, 2023). "GPT-4 Turbo vs GPT-4: What Is OpenAI's ChatGPT Turbo?". Tech.co. Archived from the original on May 14, 2024. Retrieved May 13, 2024.

- ^ Field, Hayden (May 13, 2024). "OpenAI launches new AI model and desktop version of ChatGPT". CNBC. Archived from the original on May 22, 2024. Retrieved May 13, 2024.

- ^ Metz, Cade (January 10, 2024). "OpenAI Unveils App Store for Customized Versions of ChatGPT". The New York Times. Archived from the original on February 7, 2024. Retrieved January 13, 2024.

- ^ a b David, Emilia (January 10, 2024). "OpenAI's custom GPT Store is now open for business". The Verge. Archived from the original on February 18, 2024. Retrieved January 13, 2024.

- ^ Shankland, Stephen (January 10, 2024). "OpenAI's GPT Store Now Offers a Selection of 3 Million Custom AI Bots". CNET. Archived from the original on February 8, 2024. Retrieved January 13, 2024.

- ^ "Introducing the GPT Store". OpenAI. January 10, 2024. Archived from the original on February 17, 2024. Retrieved January 13, 2024.

- ^ Cheng, Michelle (January 11, 2024). "AI girlfriend bots are already flooding OpenAI's GPT store". Quartz. Archived from the original on February 18, 2024. Retrieved January 13, 2024.

- ^ "ChatGPT Release Notes". OpenAI. Archived from the original on March 23, 2023. Retrieved February 8, 2023.

- ^ Belelli, Achille (June 20, 2024). "ChatGPT Come Funziona" [How ChatGPT Works]. FinanzaDigitale.

- ^ Field, Hayden (May 13, 2024). "OpenAI launches new AI model GPT-4o and desktop version of ChatGPT". CNBC. Retrieved July 23, 2024.

- ^ Franzen, Carl (July 18, 2024). "OpenAI unveils GPT-4o mini — a smaller, much cheaper multimodal AI model". VentureBeat. Retrieved July 21, 2024.

- ^ Heaven, Will Douglas. "The inside story of how ChatGPT was built from the people who made it". MIT Technology Review. Archived from the original on March 6, 2023. Retrieved March 6, 2023.

- ^ Simons, John (February 5, 2023). "The Creator of ChatGPT Thinks AI Should Be Regulated". Time. Archived from the original on March 8, 2023. Retrieved March 21, 2023.

- ^ Cowen, Tyler (May 23, 2023). "ChatGPT Is Also an Impressive Feat of Marketing". bloomberg.com. Archived from the original on February 18, 2024. Retrieved May 24, 2023.

- ^ Kantrowitz, Alex (December 2, 2022). "Finally, an A.I. Chatbot That Reliably Passes "the Nazi Test"". Slate. Archived from the original on January 17, 2023. Retrieved December 5, 2022.

- ^ Thompson, Derek (December 8, 2022). "Breakthroughs of the Year". The Atlantic. Archived from the original on January 15, 2023. Retrieved December 18, 2022.

- ^ a b Piper, Kelsey (December 15, 2022). "ChatGPT has given everyone a glimpse at AI's astounding progress". Vox. Archived from the original on January 19, 2023. Retrieved December 18, 2022.

- ^ Scharth, Marcel (December 5, 2022). "The ChatGPT chatbot is blowing people away with its writing skills. An expert explains why it's so impressive". The Conversation. Archived from the original on January 19, 2023. Retrieved December 30, 2022.

- ^ Levy, Steven (September 11, 2023). "Sundar Pichai on Google's AI, Microsoft's AI, OpenAI, and ... Did We Mention AI?". Wired. Archived from the original on September 11, 2023. Retrieved September 12, 2023.

- ^ Grant, Nico; Metz, Cade (December 21, 2022). "A New Chat Bot Is a 'Code Red' for Google's Search Business". The New York Times. ISSN 0362-4331. Archived from the original on December 21, 2022. Retrieved December 30, 2022.

- ^ Alba, Davey; Love, Julia (February 6, 2023). "Google releases ChatGPT rival AI 'Bard' to early testers". Los Angeles Times. ISSN 0458-3035. Archived from the original on February 6, 2023. Retrieved February 6, 2023.

- ^ Ortiz, Sabrina (May 10, 2023). "Every major AI feature announced at Google I/O 2023". ZDNet. Archived from the original on May 10, 2023. Retrieved September 12, 2023.

- ^ Rachini, Mouhamad (December 15, 2022). "ChatGPT a 'landmark event' for AI, but what does it mean for the future of human labor and disinformation?". CBC. Archived from the original on January 19, 2023. Retrieved December 18, 2022.

- ^ Pearl, Mike (December 3, 2022). "The ChatGPT chatbot from OpenAI is amazing, creative, and totally wrong". Mashable. Archived from the original on December 10, 2022. Retrieved December 5, 2022.

- ^ Pitt, Sofia (December 15, 2022). "Google vs. ChatGPT: Here's what happened when I swapped services for a day". CNBC. Archived from the original on January 16, 2023. Retrieved December 18, 2022.

- ^ Mannix, Liam (December 13, 2022). "Is AI coming of age – or starting to reach its limits?". The Sydney Morning Herald. Archived from the original on January 7, 2023. Retrieved December 18, 2022.

- ^ Hicks, Michael Townsen; Humphries, James; Slater, Joe (June 8, 2024). "ChatGPT is bullshit". Ethics and Information Technology. 26 (2): 38. doi:10.1007/s10676-024-09775-5. ISSN 1572-8439.

- ^ Vincent, James (December 5, 2022). "AI-generated answers temporarily banned on coding Q&A site Stack Overflow". The Verge. Archived from the original on January 17, 2023. Retrieved December 5, 2022.

- ^ Vincent, James (January 5, 2023). "Top AI conference bans use of ChatGPT and AI language tools to write academic papers". The Verge. Archived from the original on January 17, 2023. Retrieved January 6, 2023.

- ^ Curry, Rachel (June 13, 2023). "Samsung among companies starting to draft ChatGPT policies for workers". CNBC. Archived from the original on June 14, 2023. Retrieved June 15, 2023.

- ^ a b Cain, Sian (January 16, 2023). "'This song sucks': Nick Cave responds to ChatGPT song written in the style of Nick Cave". The Guardian. Archived from the original on January 18, 2023. Retrieved January 17, 2023.

- ^ Cave, Nick (January 16, 2023). "I asked Chat GPT to write a song in the style of Nick Cave, and this is what it produced. What do you think?". The Red Hand Files. Issue #218. Archived from the original on January 20, 2023. Retrieved January 20, 2023.

- ^ Sparrow, Jeff (January 20, 2023). "Are AI-generated songs a 'grotesque mockery' of humanity or simply an opportunity to make a new kind of music?". The Guardian. Archived from the original on February 3, 2023. Retrieved January 20, 2023.

- ^ Chow, Andrew; Perrigo, Billy (February 16, 2023). "The AI Arms Race Is On. Start Worrying". Time. Archived from the original on February 19, 2023. Retrieved March 21, 2023.

- ^ Davidson, Helen (February 23, 2023). "'Political propaganda': China clamps down on access to ChatGPT". The Guardian. Archived from the original on June 14, 2023. Retrieved June 15, 2023.

- ^ Lau, Chris (May 9, 2023). "Chinese police detain man for allegedly using ChatGPT to spread rumors online". CNN. Archived from the original on December 26, 2023. Retrieved December 26, 2023.

- ^ Feng, Coco (December 29, 2023). "ChatGPT-aided ransomware in China results in four arrests". South China Morning Post. Archived from the original on February 4, 2024. Retrieved January 2, 2024.

- ^ He Qitong; Li Dongxu (May 31, 2024). "Young Chinese Have Almost No Concerns About AI, Survey Finds". Sixth Tone.

- ^ "ChatGPT banned in Italy over privacy concerns". BBC News. March 31, 2023. Archived from the original on March 31, 2023. Retrieved March 31, 2023.

- ^ Borrelli, Silvia Sciorilli; Murgia, Madhumita (March 31, 2023). "Italy temporarily bans ChatGPT over privacy concerns". Financial Times. Archived from the original on March 31, 2023. Retrieved March 31, 2023.

- ^ "ChatGPT accessible again in Italy". BBC. Archived from the original on May 1, 2023. Retrieved May 1, 2023.

- ^ Gerken, Tom. "ChatGPT: Mayor starts legal bid over false bribery claim". BBC. Archived from the original on April 7, 2023. Retrieved April 7, 2023.

- ^ Zakrzewski, Cat (July 13, 2023). "The FTC is investigating whether ChatGPT harms consumers". The Washington Post. Archived from the original on July 13, 2023. Retrieved July 13, 2023.

- ^ Tracy, Ryan; McKinnon, John D. (July 13, 2023). "ChatGPT Comes Under Investigation by Federal Trade Commission". The Wall Street Journal. News Corp. Archived from the original on July 13, 2023. Retrieved July 13, 2023.

- ^ Feiner, Lauren (July 13, 2023). "FTC investigating ChatGPT-maker OpenAI for possible consumer harm". CNBC. Archived from the original on July 13, 2023. Retrieved July 13, 2023.

- ^ "ChatGPT creator OpenAI faces US probe over libellous output". Ars Technica. Archived from the original on July 15, 2023. Retrieved July 15, 2023.